
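Intel®, Micron™, and Supermicro® are pleased to announce that together they have set an impressive 25 world records on the STAC-M3™ benchmark. STAC-M3 is a set of industry-standard financial-enterprise tick-analytics benchmarks for the database software and hardware stacks that manage large volumes of time-series market data ("tick data"). The tests consist of a baseline suite (Antuco) and a larger-scale suite (Kanaga).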

The record-breaking solution, built on Intel Xeon 6767P processors (Intel Xeon 6), Micron 9550 NVMe™ SSDs, Micron 128GB DDR5 registered DIMMs, and Supermicro SSG-222B-NE3X24R Petascale servers, outperformed every publicly available report and set the following 25 new performance records:

- 19 of the 24 Kanaga mean-response-time benchmarks (including all 10 at 50 and 100 users)

- 3 of the 5 Kanaga throughput benchmarks

- All 3 Antuco 50-user and 100-user benchmarks

In this blog, we focus on showing how, in the largest-scale Kanaga and Antuco tests, the solution delivered remarkable performance with far less hardware than previous record-holding systems.

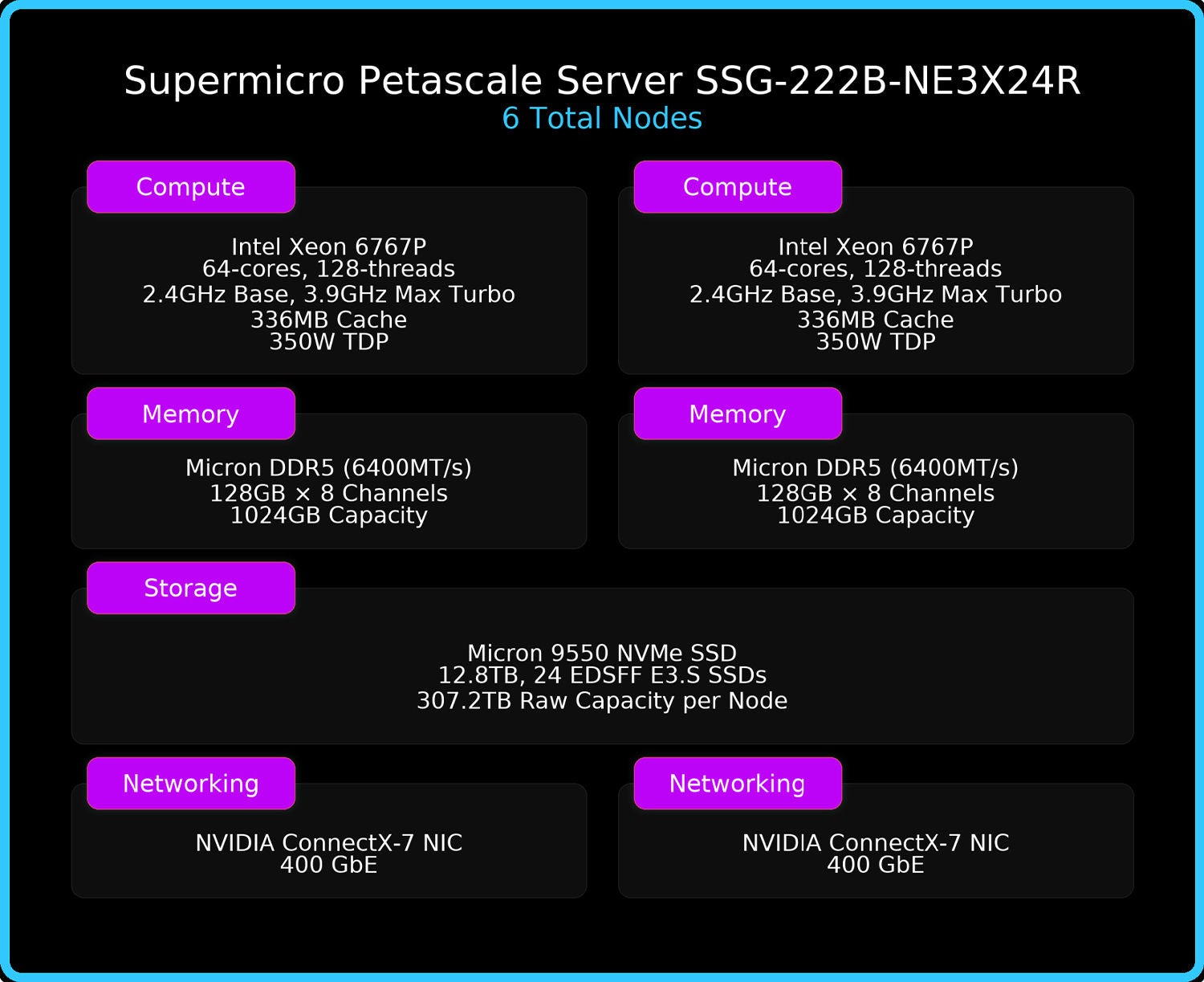

System architecture

Before looking at the results, let's look at the system under test.

We used six Supermicro SSG-222B-NE3X24R Petascale servers clustered over 400GbE. Each server is equipped with:

- Two Intel Xeon 6767P processors (64 cores each): 128 cores of compute per node, 768 cores in total

- Sixteen 128GB Micron DDR5 DIMMs: 2TB of memory per node, 12TB in total

- Twenty-four 12.8TB Micron 9550 NVMe SSDs: 307.2TB of storage per node, 1,843TB in total

- Two 400GbE ConnectX-7 SmartNICs: 100GB/s of network throughput per node, 600GB/s in total
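The per-node and cluster totals in the list above follow from simple multiplication. The Python sketch below recomputes them as a sanity check. The `NodeSpec` dataclass and `totals` function are hypothetical names for illustration, and the sketch assumes binary units for DRAM (1TB = 1,024GB), decimal units for SSDs (1TB = 1,000GB), and 8 bits per byte for the network figure with protocol overhead ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical per-node bill of materials, using the figures listed above."""
    cpus: int = 2
    cores_per_cpu: int = 64
    dimms: int = 16
    dimm_gb: int = 128           # DRAM capacity per DIMM (binary GB)
    ssds: int = 24
    ssd_gb: int = 12_800         # 12.8TB per SSD (decimal GB)
    nics: int = 2
    nic_gbit_per_s: int = 400    # 400GbE per NIC


def totals(spec: NodeSpec, nodes: int = 1) -> dict:
    """Cores, memory (TB), raw storage (TB), and network (GB/s) for `nodes` servers.

    Integer counts are multiplied before dividing so the results stay exact.
    """
    return {
        "cores": nodes * spec.cpus * spec.cores_per_cpu,
        # Assumption: DRAM counted in binary units, 1TB = 1,024GB.
        "memory_tb": nodes * spec.dimms * spec.dimm_gb / 1024,
        # Assumption: SSD capacity counted in decimal units, 1TB = 1,000GB.
        "storage_tb": nodes * spec.ssds * spec.ssd_gb / 1000,
        # 8 bits per byte; protocol overhead is ignored.
        "network_gb_per_s": nodes * spec.nics * spec.nic_gbit_per_s / 8,
    }


if __name__ == "__main__":
    print(totals(NodeSpec()))
    # {'cores': 128, 'memory_tb': 2.0, 'storage_tb': 307.2, 'network_gb_per_s': 100.0}
    print(totals(NodeSpec(), nodes=6))
    # {'cores': 768, 'memory_tb': 12.0, 'storage_tb': 1843.2, 'network_gb_per_s': 600.0}
```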

The solution runs KX's kdb+ 4.1 with the STAC-M3 Pack for kdb+.

The STAC-M3 benchmark suite

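Analysis of time-series data (such as tick-by-tick quotes and trade histories) is essential to a wide range of functions, from algorithm development to risk management. Now that automated trading, and high-frequency trading strategies in particular, dominate the markets, this analysis has become both more important and more complex. Trading robots compete with one another at microsecond timescales or below, and the volume of quotes and trades is larger than ever. As a result, technologies that can store and analyze this activity efficiently and quickly are increasingly important.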

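The STAC Benchmark Council developed the STAC-M3 benchmarks to provide a common basis for quantifying how much emerging, innovative software, cloud, and hardware technologies improve performance when storing, retrieving, and analyzing market time-series ("tick") data.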

Based on workload scale, STAC-M3 is divided into two benchmark suites:

- Antuco: a smaller benchmark designed to measure single-node performance

- Kanaga: a scaled-up version of Antuco that focuses on large hardware deployments

The STAC-M3 report contains detailed results for the benchmark suites and every test, but this blog focuses on the Kanaga results at the largest dataset size.

STAC-M3 scales up the dataset by starting small and growing it by a factor of 1.6 each year over five years. The "Year 5" dataset is the largest of the tested datasets and the most difficult for a solution to process. STAC-M3 reports results as latency in milliseconds, so lower values indicate better performance.
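To make the 1.6x annual growth factor concrete, the sketch below computes each year's dataset size relative to the first year. It assumes that Year 1 is the base size and that each later year is exactly 1.6 times the previous one; the function name `relative_sizes` is hypothetical, and the actual record counts for each year are defined by the STAC-M3 specification, not by this code.

```python
def relative_sizes(years: int = 5, growth: float = 1.6) -> list[float]:
    """Size of each year's dataset relative to Year 1, assuming constant annual growth."""
    return [growth ** (year - 1) for year in range(1, years + 1)]


if __name__ == "__main__":
    for year, size in enumerate(relative_sizes(), start=1):
        print(f"Year {year}: {size:.2f}x Year 1")
```

Under this assumption, the Year 5 dataset is roughly 6.55 times the size of Year 1 (1.6 to the fourth power).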

Solution comparison

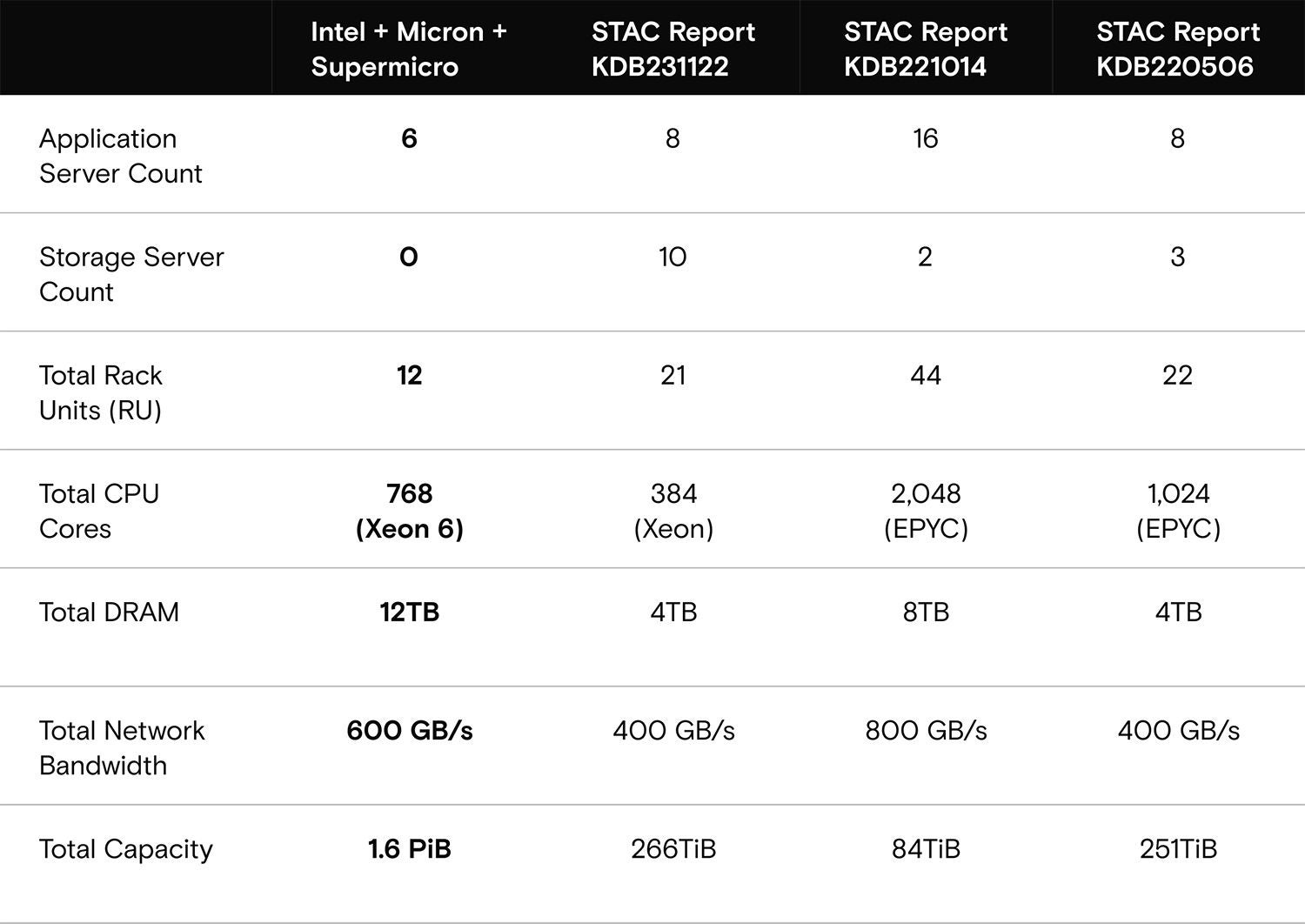

STAC-M3 is run on many different hardware configurations, and the choice of components is left to the architects who design the system under test. The Micron solution focused on three main considerations:

- Density: Can Micron deliver better performance than solutions that require a much larger hardware footprint?

- TCO: Is Micron's solution cost-efficient and right-sized?

- Scalability: Can Micron's solution scale beyond the tested configuration?

We compared Micron's solution with three recently published STAC-M3 reports:

- Micron has the smallest footprint in rack units (RU): 12RU vs. 21–44RU

- Micron uses the second-fewest CPU cores: 768 cores vs. 384–2,048 cores

- Micron has the largest storage capacity: 1.6PiB (equivalent to 1.8PB) vs. 84–266TiB

- Micron has the largest memory capacity: 12TB vs. 4–8TB
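The capacity comparison mixes decimal units (PB, TB) and binary units (PiB, TiB). The sketch below converts the 1,843.2TB raw SSD total (6 nodes × 24 SSDs × 12.8TB) into both, to show that 1.8PB and 1.6PiB describe the same capacity. The helper names are hypothetical; the conversion factors are the standard ones (1PB = 10^15 bytes, 1PiB = 2^50 bytes).

```python
def decimal_tb_to_pb(tb: float) -> float:
    """Decimal terabytes to decimal petabytes: 1PB = 1,000TB."""
    return tb / 1000


def decimal_tb_to_pib(tb: float) -> float:
    """Decimal terabytes (10**12 bytes) to pebibytes (2**50 bytes)."""
    return tb * 10**12 / 2**50


if __name__ == "__main__":
    raw_tb = 1843.2  # 6 nodes x 24 SSDs x 12.8TB
    print(f"{decimal_tb_to_pb(raw_tb):.2f} PB")    # 1.84 PB
    print(f"{decimal_tb_to_pib(raw_tb):.2f} PiB")  # 1.64 PiB
```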

As the comparison shows, our solution significantly outperformed previous record-holding systems while using a much smaller footprint.

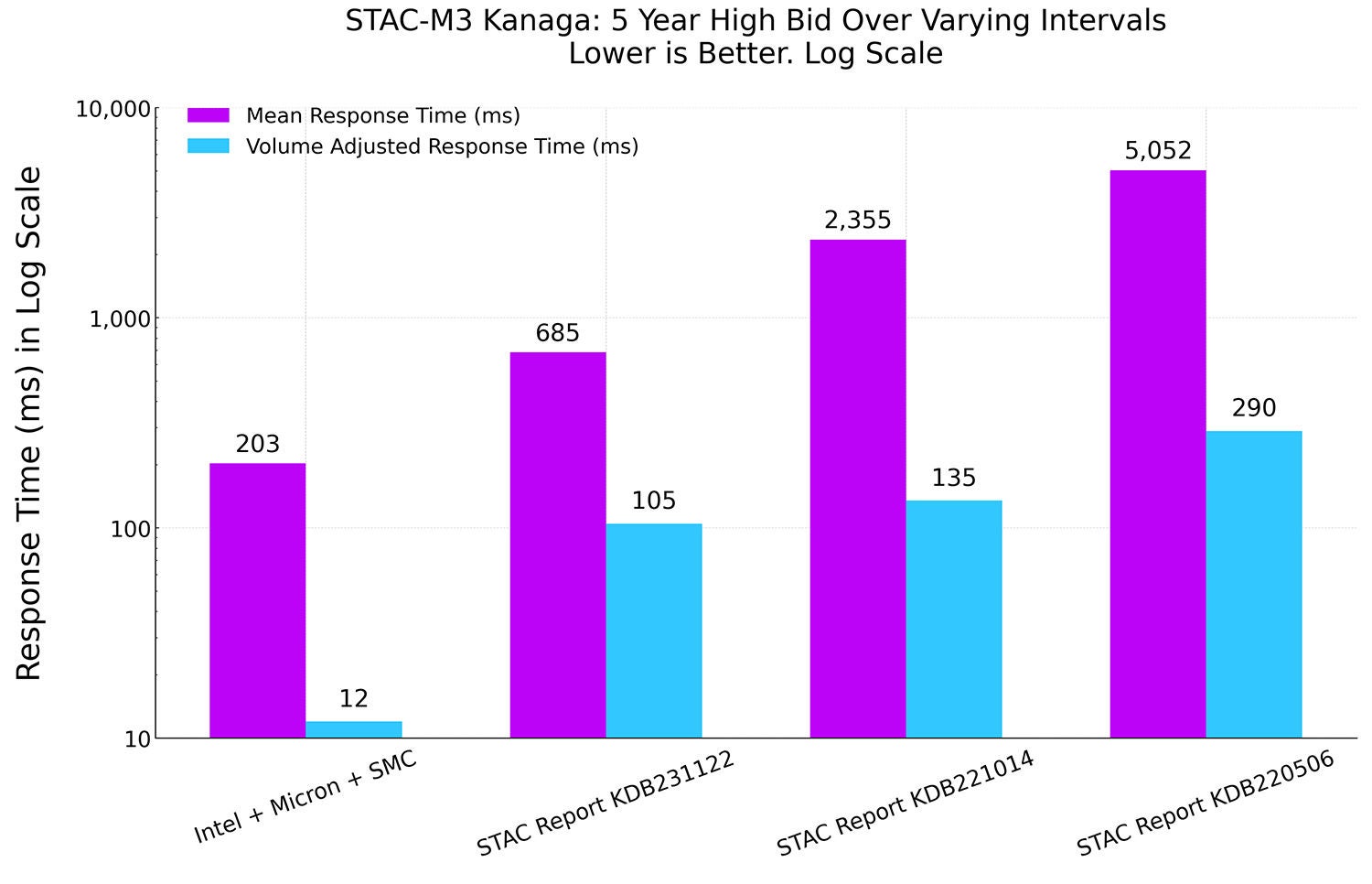

Results: highest bid over various intervals

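5YRHIBID returns, in a single thread, the highest bid for each symbol in a specified 1% of the symbols over a given span of years in the dataset. The 5YRHIBID range runs from the first day of 2011 through the last day of 2015. This workload is read-intensive and has a low algorithmic compute load.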
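In query terms, 5YRHIBID is a grouped maximum over a date range. The Python sketch below shows that logic on an in-memory list of quote records. The `Quote` record layout, the `highest_bid` function, and the sample data are hypothetical; the benchmark itself runs this kind of query in kdb+ over the on-disk tick database, and this code does not model that storage layer.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Quote:
    """Hypothetical minimal quote record."""
    symbol: str
    day: date
    bid: float


def highest_bid(quotes, symbols, start: date, end: date) -> dict:
    """Return {symbol: highest bid} for the requested symbols within [start, end]."""
    wanted = set(symbols)
    best = {}
    for q in quotes:  # one sequential pass in a single thread
        if q.symbol in wanted and start <= q.day <= end:
            if q.symbol not in best or q.bid > best[q.symbol]:
                best[q.symbol] = q.bid
    return best


if __name__ == "__main__":
    sample = [
        Quote("AAA", date(2011, 1, 3), 10.5),
        Quote("AAA", date(2014, 6, 2), 12.25),
        Quote("BBB", date(2015, 12, 31), 7.0),
        Quote("BBB", date(2016, 1, 4), 9.0),  # outside the 2011-2015 range
    ]
    print(highest_bid(sample, ["AAA", "BBB"], date(2011, 1, 1), date(2015, 12, 31)))
    # {'AAA': 12.25, 'BBB': 7.0}
```

Because each record is touched once and only compared, the cost is dominated by reading the data, which matches the read-heavy, compute-light profile described above.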

Dramatic improvement in response time

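Micron's STAC-M3 solution cut mean response time by 70% compared with the next-best score, and volume-adjusted response time by 89%. Volume-adjusted response time normalizes response time to show how the response time per quote or trade changes as the dataset grows. Note that the chart above uses a logarithmic scale.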
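STAC's exact volume-adjustment formula is defined in the benchmark specification. The sketch below assumes a simple normalization, response time per million quote and trade records queried, purely to illustrate why a normalized metric can reveal trends that raw response time hides. The function name, the per-million scale, and the input numbers are all hypothetical and are not STAC-M3 results.

```python
def volume_adjusted_ms(response_ms: float, records: int, per: int = 1_000_000) -> float:
    """Hypothetical normalization: milliseconds of response time per `per` records."""
    if records <= 0:
        raise ValueError("records must be positive")
    return response_ms * per / records


if __name__ == "__main__":
    # Made-up inputs for illustration only: the same query on a small and a large dataset.
    small = volume_adjusted_ms(response_ms=40.0, records=2_000_000)
    large = volume_adjusted_ms(response_ms=160.0, records=16_000_000)
    print(small, large)  # 20.0 10.0
```

In this made-up example the raw response time quadruples, yet the cost per record halves; that per-record trend is the kind of behavior a volume-adjusted metric is meant to expose.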

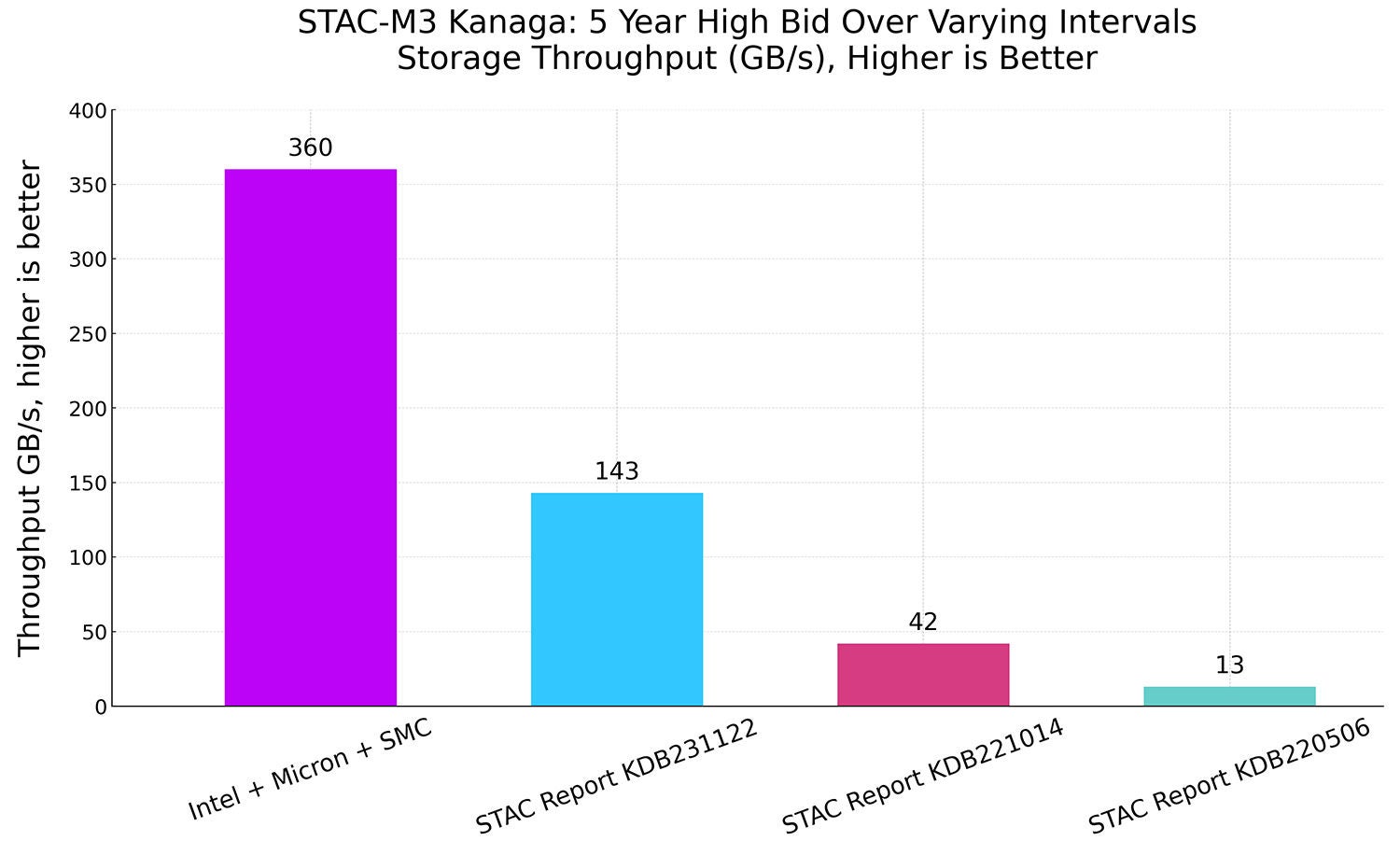

One reason for the large drop in response time is the dramatic increase in storage throughput delivered by the Micron 9550 NVMe SSDs.

Density: a major leap in storage performance

Micron's solution delivers more than 2.5 times the storage performance of the next-best test, in a much smaller footprint.

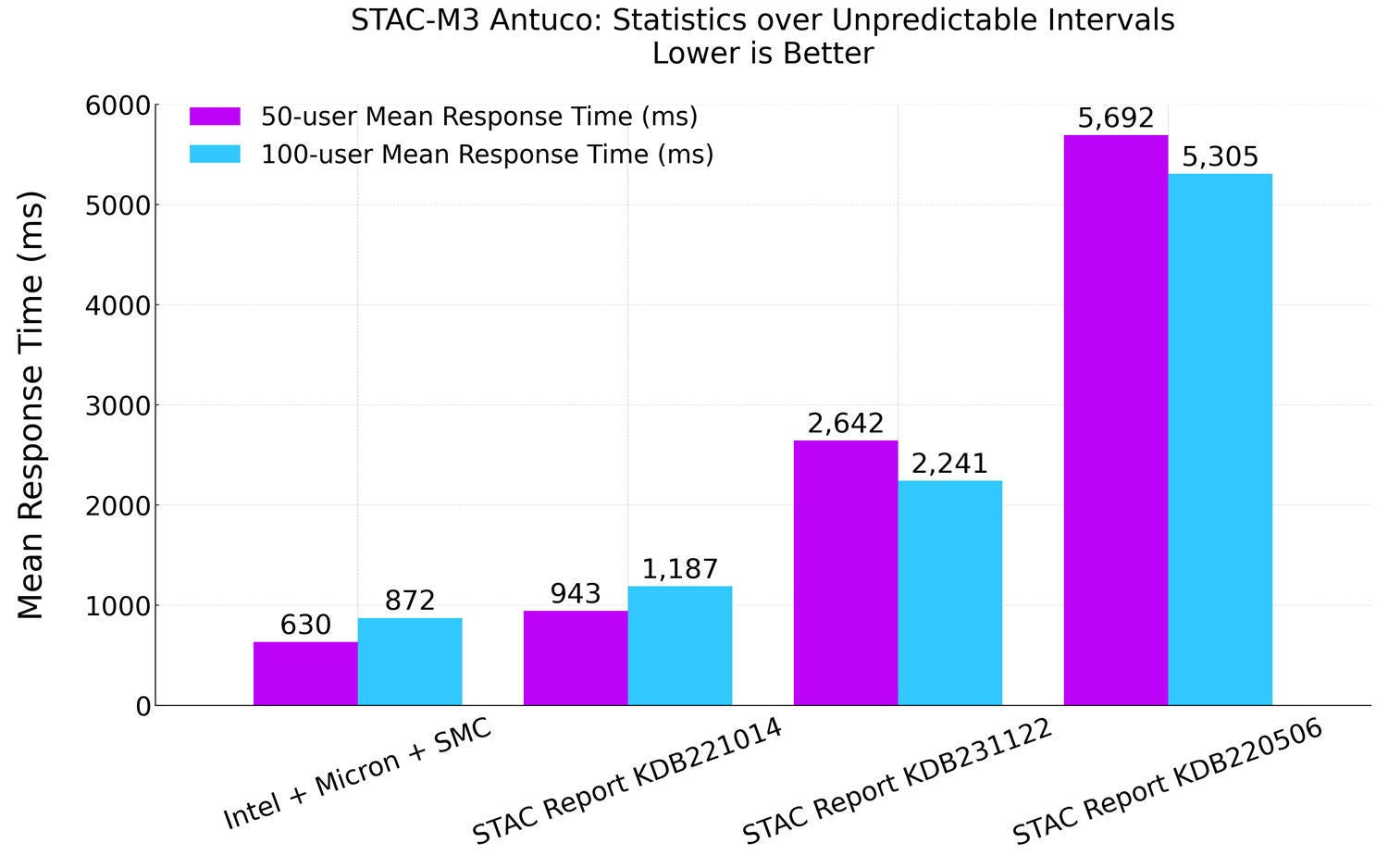

Results: statistics over unpredictable intervals

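STATS-UI: Each user queries a unique combination of exchange, date, and start time, and the query returns basic statistics for every high-volume symbol on one exchange for each minute of a 100-minute range. Start times are randomly offset from minute boundaries, and every range crosses a date boundary. This workload places heavy demands on both reads and algorithmic computation. The test is designed to measure performance under increasing load in high-concurrency scenarios, particularly at the 50-user and 100-user levels.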
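The core of STATS-UI is bucketing trades into consecutive one-minute windows that start at an arbitrary offset. The Python sketch below computes a count, mean, minimum, and maximum of trade prices per window for one symbol. The choice of statistics, the `(timestamp, price)` tuple layout, and the `minute_stats` name are assumptions for illustration; the exact statistics are set by the STAC-M3 specification. Working with full `datetime` values lets a window cross midnight without special handling, mirroring the date-boundary requirement.

```python
from datetime import datetime, timedelta
from statistics import mean


def minute_stats(trades, start: datetime, minutes: int = 100) -> list:
    """Basic statistics per 1-minute window over [start, start + minutes).

    trades: iterable of (timestamp, price) tuples for one symbol (hypothetical layout).
    start: window start; it does not need to fall on a minute boundary.
    Returns a list of length `minutes`; windows with no trades are None.
    """
    one_minute = timedelta(minutes=1)
    buckets = [[] for _ in range(minutes)]
    for ts, price in trades:
        offset = ts - start
        if timedelta(0) <= offset < minutes * one_minute:
            # Dividing one timedelta by another with // yields an int window index.
            buckets[offset // one_minute].append(price)
    return [
        {"count": len(b), "mean": mean(b), "min": min(b), "max": max(b)} if b else None
        for b in buckets
    ]


if __name__ == "__main__":
    start = datetime(2015, 3, 2, 23, 10, 17)  # 17 seconds past the minute boundary
    trades = [
        (datetime(2015, 3, 2, 23, 10, 20), 10.0),
        (datetime(2015, 3, 2, 23, 11, 0), 11.0),
        (datetime(2015, 3, 3, 0, 5, 0), 12.0),  # after midnight: the range crosses a date
    ]
    stats = minute_stats(trades, start)
    print(stats[0])  # {'count': 2, 'mean': 10.5, 'min': 10.0, 'max': 11.0}
```

A full query would repeat this for every high-volume symbol on the chosen exchange, which is where the combined read and compute load comes from.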

Speed: the fastest results to date on compute- and read-intensive queries at high concurrency

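Compared with KDB221014, the previous record holder, Micron's solution completes the compute-intensive 100-user benchmark 36% faster while using 62% fewer CPU cores. Micron's mean response time on the 100-user benchmark is even lower than the previous record holder's response time on the 50-user benchmark.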

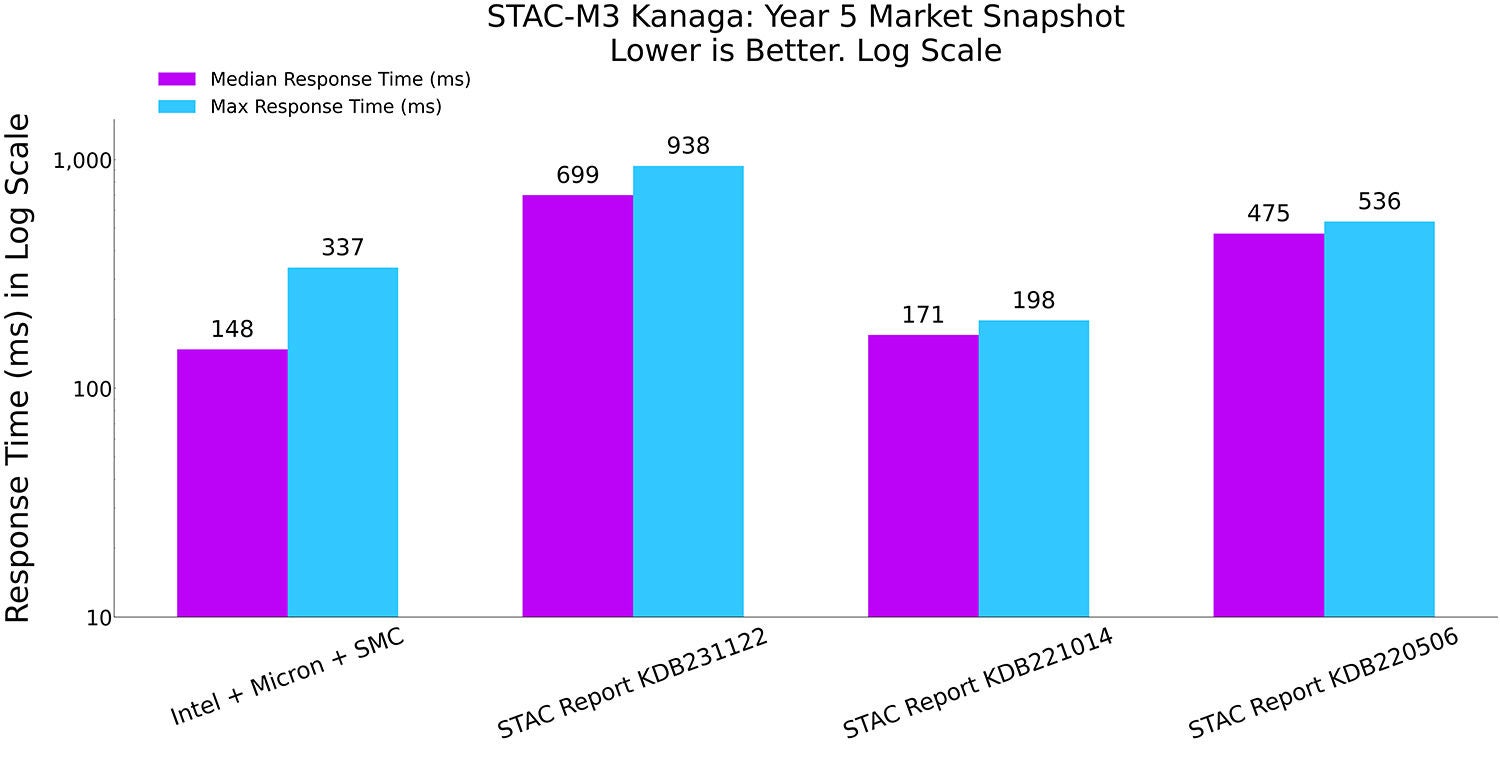

Results: Year 5 market snapshot

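YR5-MKTSNAP: returns the latest quote and the latest trade price and size for each symbol in a specified 1% of the symbols, at a specific time on a specific date in a given year of the dataset. YR5-MKTSNAP queries dates and times in 2015 in the largest dataset. This workload is read-intensive and also has a very high algorithmic compute load.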
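A market snapshot is an "as-of" lookup: for each symbol, find the last quote and the last trade at or before the snapshot time. The Python sketch below does this with binary search over time-sorted lists. The per-symbol dictionary layout, the record tuples (`(bid, ask)` for quotes, `(price, size)` for trades), and the function names are assumptions for illustration; kdb+ provides its own as-of join, and this code does not try to reproduce it.

```python
from bisect import bisect_right
from datetime import datetime


def last_at_or_before(times, values, t):
    """Return the value whose timestamp is the latest one <= t, or None if none exists.

    `times` must be sorted ascending and parallel to `values`.
    """
    i = bisect_right(times, t)
    return values[i - 1] if i else None


def market_snapshot(quotes: dict, trades: dict, symbols, t: datetime) -> dict:
    """Latest quote and trade per symbol as of time t.

    quotes/trades: {symbol: (sorted_timestamps, records)} (hypothetical layout).
    """
    snap = {}
    for sym in symbols:
        q_times, q_recs = quotes.get(sym, ([], []))
        t_times, t_recs = trades.get(sym, ([], []))
        snap[sym] = {
            "quote": last_at_or_before(q_times, q_recs, t),
            "trade": last_at_or_before(t_times, t_recs, t),
        }
    return snap


if __name__ == "__main__":
    d = datetime(2015, 7, 1)
    quotes = {"AAA": ([d.replace(hour=9), d.replace(hour=11)], [(10.0, 10.1), (10.2, 10.3)])}
    trades = {"AAA": ([d.replace(hour=10)], [(10.05, 300)])}
    print(market_snapshot(quotes, trades, ["AAA"], d.replace(hour=10, minute=30)))
    # {'AAA': {'quote': (10.0, 10.1), 'trade': (10.05, 300)}}
```

Binary search keeps each lookup logarithmic in the number of records per symbol, so the work per query is mostly locating and reading the right slice of data.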

TCO: top-tier performance with fewer cores and far less rack space

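Micron's solution had the lowest median response time. The KDB221014 report has the lowest maximum response time, but that test used 44RU and 2,048 CPU cores, whereas Micron's solution used 12RU and 768 CPU cores. In other words, Micron's solution reduced CPU core count by 62% and rack space by 73% while also lowering median response time.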
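The 62% and 73% figures come from comparing 768 with 2,048 CPU cores and 12RU with 44RU. The hypothetical helper below reproduces that arithmetic as a percentage reduction relative to the larger system.

```python
def percent_fewer(ours: float, theirs: float) -> float:
    """How much smaller `ours` is than `theirs`, as a percentage of `theirs`."""
    return (1 - ours / theirs) * 100


if __name__ == "__main__":
    print(f"CPU cores: {percent_fewer(768, 2048):.1f}% fewer")  # CPU cores: 62.5% fewer
    print(f"Rack space: {percent_fewer(12, 44):.1f}% less")     # Rack space: 72.7% less
```

The exact values, 62.5% and about 72.7%, are reported in the text as 62% and 73%.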

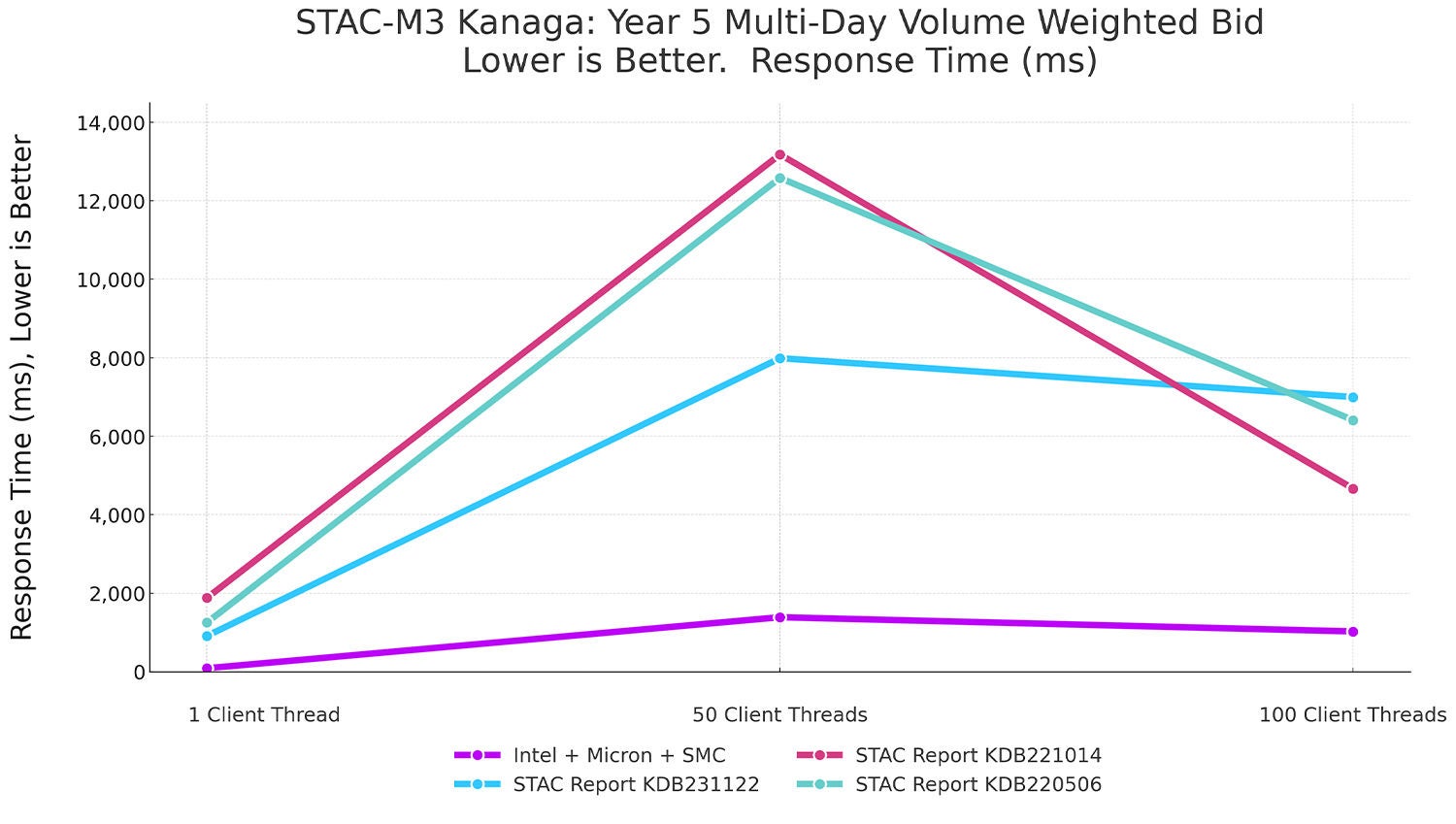

Results: Year 5 volume-weighted bid

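YR5 VWAB-12D-HO: computes the 4-hour volume-weighted average bid over 12 randomly selected days, with a varying number of concurrent requests. It runs across multiple years of the Kanaga dataset, and the dates and symbols are chosen so that requests overlap heavily, a pattern that is common in the real world. This test is read-intensive and has a low algorithmic compute load.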
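A volume-weighted average bid weights each bid price by its size: VWAB = Σ(bid × size) / Σ(size). The Python sketch below applies that formula to quotes inside a 4-hour window on each requested day. The 09:30 window start, the `(timestamp, bid, bid_size)` record layout, the use of bid size as the weight, and the `vwab` name are assumptions for illustration; the source does not specify these details.

```python
from datetime import date, datetime, time, timedelta


def vwab(quotes, days, window_start: time = time(9, 30), hours: int = 4) -> dict:
    """Volume-weighted average bid per day over a fixed intraday window.

    quotes: iterable of (timestamp, bid, bid_size) tuples for one symbol (hypothetical layout).
    days: dates to evaluate, for example 12 randomly chosen trading days.
    Returns {date: vwab}, or None for a day with no quotes in the window.
    """
    sums = {d: [0.0, 0] for d in days}  # date -> [sum(bid * size), sum(size)]
    for ts, bid, size in quotes:
        d = ts.date()
        if d not in sums:
            continue
        lo = datetime.combine(d, window_start)
        if lo <= ts < lo + timedelta(hours=hours):
            sums[d][0] += bid * size
            sums[d][1] += size
    return {d: (num / vol if vol else None) for d, (num, vol) in sums.items()}


if __name__ == "__main__":
    day = date(2015, 4, 6)
    qs = [
        (datetime(2015, 4, 6, 10, 0), 10.0, 100),
        (datetime(2015, 4, 6, 12, 0), 11.0, 300),
        (datetime(2015, 4, 6, 15, 0), 50.0, 999),  # after the 4-hour window
    ]
    print(vwab(qs, [day]))  # {datetime.date(2015, 4, 6): 10.75}
```

When many concurrent requests ask for overlapping days and symbols, they read much of the same data, so this pattern rewards systems that can serve repeated reads quickly.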

This test is particularly important because it shows a solution's ability to handle increasing user load.

Scalability: 10x performance under user scaling

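The YR5 VWAB-12D-HO results are remarkable. With 1 client thread, Micron's solution is an order of magnitude faster than every published report. At 50 and 100 client threads, it maintains a large lead of up to 9.5x and up to 7x, respectively.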

Unlocking new frontiers

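Benchmarks show where the real frontier lies. Records push that frontier forward, redefine what is possible, and spark our shared imagination. When a technological leap dramatically increases speed, new use cases emerge, and strategies that were once out of reach become reality. The faster you can look back at the past, the farther ahead you can see.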

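That is exactly what happens when compute, memory, and storage are designed to work in concert: the frontier moves forward. STAC-M3 is a suite dedicated to validating a solution's ability to handle demanding financial-services workloads. Intel, Micron, and Supermicro pooled their resources to build a dense, scalable, modern stack. This stack did not just beat records set by larger configurations; it broke them with dramatically higher performance.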

Learn more

- STAC-M3 report: KDB250929, six Supermicro Petascale servers (each with two Intel® Xeon® 6767P processors and twenty-four Micron™ 9550 MAX NVMe™ SSDs), KDB+ 4.1

- Supermicro press release: Supermicro, Intel, and Micron collaborate to achieve record-breaking results on the STAC-M3™ quantitative trading benchmark

- Micron 9550 SSD | Micron DDR5 memory

If you're attending the STAC Conference in New York on October 28, stop by and talk to the architects behind this work:

- Kevin Gildea (Intel, Solution Architect)

- Jay Walstrum (Micron, Principal Data Center Solutions Architect)

- Wendell Wenjen (Supermicro, Director of Storage Market Development)

- Ryan Meredith (Micron, Director of Data Center Workload Engineering)